Today, I am going to review an educational tutorial that Prof. Andrew Ng delivered at NIPS 2016. As far as I know, no official video is available, but you can find the lecture slides by searching the internet.

You can watch a video with almost identical content (even the title is exactly the same) at the following link:

* I highly recommend listening to his full lecture. However, watching the video takes too much time if you just want a quick overview. Here, I have summarized what he tried to convey in his talk. I hope this helps.

** Note that I skipped a few slides and rearranged the order of others to make them easier to explain.

Outline

- Trends of Deep Learning (DL)

  - Scale is driving DL progress

  - Rise of end-to-end learning

  - When to and when not to use "end-to-end" learning

- Machine Learning (ML) Strategy (very practical advice)

  - How to manage train/dev/test data sets and bias/variance

  - Basic recipe for ML

  - Defining human-level performance for each application is very useful


TL;DR

Abstract

(from the NIPS 2016 website)

How do you get deep learning to work in your business, product, or scientific study? The rise of highly scalable deep learning techniques is changing how you can best approach AI problems. This includes how you define your train/dev/test split, how you organize your data, how you should think through your search among promising model architectures, and even how you might develop new AI-enabled products. In this tutorial, you’ll learn about the emerging best practices in this nascent area. You’ll come away able to better organize your and your team’s work when developing deep learning applications.

Trend #1

Q) Why is Deep Learning working so well NOW?

A) Scale drives DL progress

The red line, which represents traditional learning algorithms such as SVM and logistic regression, plateaus in the big-data regime (the right-hand side of the x-axis). These algorithms did not know what to do with all the data we collected.

Over the last ten years, thanks to the rise of the internet, mobile devices, and IoT (Internet of Things), we have been able to march along the x-axis. Andrew commented that this is the number one reason why DL algorithms work so well.

So... the implication is:

To reach the top of the performance curve, you need both a huge amount of data and a large NN model.

Trend #2

According to Ng, the second major trend is end-to-end learning.

Until recently, a lot of machine learning produced real or integer numbers as output, e.g. 0 or 1 as a class score. In contrast, end-to-end learning can produce much more complex outputs than numbers, e.g. a caption for an image.

It is called "end-to-end" because the input and output of the system are linked directly by a neural network, unlike traditional models, which have several intermediate steps. This works well in many cases where traditional models are not effective. For example, end-to-end learning shows better performance in speech recognition tasks.

While presenting this slide, he told the following anecdote:

"This end-to-end story really upset many people. I used to go around saying that I believe 'phonemes' are a fantasy of the linguists and that machines can do well without them. One day, at a meeting at Stanford, a linguist yelled at me in public for saying that. Well... we turned out to be right."

This story might suggest that end-to-end learning is a magic key for every application, but he actually warned the audience to be careful when applying it to their own problems.

Despite all the excitement about end-to-end learning, he does not think it is the solution for every application.

It works well in "some" cases but not in many others. For example, given the safety-critical nature of autonomous driving and the resulting need for extremely high accuracy, a pure end-to-end approach is still challenging to get working there.

In addition, he commented that even though DL can almost always learn a mapping from X to Y given a reasonable amount of data, and you may even publish a paper about it, that does not mean using DL is actually a good idea (e.g. in medical diagnosis or imaging).

End-to-end works only when you have enough (x, y) data to learn a function of the needed level of complexity.

I totally agree with the above point that we should not naively rely on the learning capability of the neural network. We should exploit all the power and knowledge of the hand-designed or carefully chosen features we already have.

In the same vein, however, I have a slightly different view on "phonemes". I think they can and should also be used as an additional feature in parallel, which can reduce the burden on the neural network.

Machine Learning Strategy

Now let's move on to the next part of his lecture. Here, he offers some answers and guidelines for the following questions:

- You will often have many ideas for improving an AI system. Which ones should you pursue?

- A good strategy helps you avoid months of wasted effort. So what is a good strategy?

I think this part is the gist of his lecture. I really liked his practical tips, all of which I could apply to my own work right away.

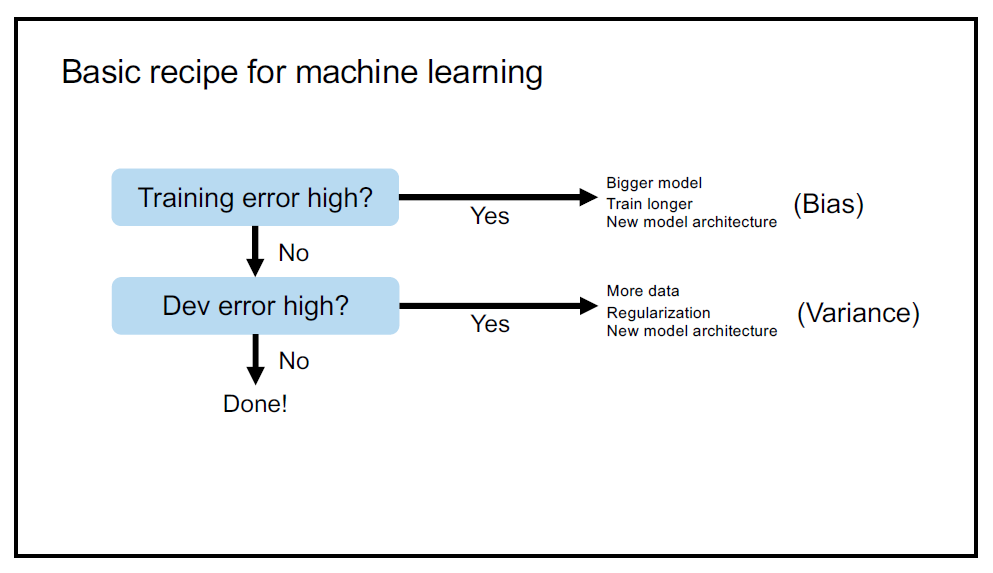

One of the tips he proposed is a kind of "standard workflow" that guides you while training the model:

When the training error is high, the bias between your model's outputs and the real data is too large. To mitigate this, you need to

- train longer,

- use a bigger model,

- or adopt a new model architecture.

Next, check whether your dev error is high. If it is, you need

- more data,

- regularization,

- or a new model architecture.
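The two-step loop above can be written down as a small decision function. This is a minimal sketch, assuming a single hypothetical `target_error` threshold for deciding what counts as "high"; the function name and threshold are my own illustration, not something taken from his slides.

```python
from __future__ import annotations


def basic_recipe(train_error: float, dev_error: float, target_error: float) -> list[str]:
    """Suggest what to try next, following the basic recipe order:
    fix high training error (bias) first, then high dev error (variance).

    target_error is a hypothetical threshold for what counts as "high".
    """
    # Step 1: high training error -> the model underfits (bias problem).
    if train_error > target_error:
        return ["train longer", "use a bigger model", "try a new model architecture"]
    # Step 2: training error is fine but dev error is high -> variance problem.
    if dev_error > target_error:
        return ["get more data", "add regularization", "try a new model architecture"]
    # Both errors are acceptable: nothing left to fix for now.
    return ["done"]


# Hypothetical error rates, as fractions.
print(basic_recipe(train_error=0.15, dev_error=0.16, target_error=0.05))
print(basic_recipe(train_error=0.03, dev_error=0.12, target_error=0.05))
print(basic_recipe(train_error=0.03, dev_error=0.04, target_error=0.05))
```

In practice this is a loop: apply one of the suggested fixes, retrain, and check again until both errors are acceptable.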

* Yes, I know this seems too obvious. Still, I want to point out that everything seems simple once it is organized into a unified system. Turning implicit know-how into an explicit framework is not an easy task.

Here, be careful about what the keywords bias and variance imply. In his talk, bias and variance have slightly different meanings from the textbook definitions (so we are not trying to trade one off against the other), although they share a similar concept.

In the era before DL, people used to trade off bias against variance by tuning regularization, and this coupling could not be overcome because the two were tied so strongly.

Nowadays, however, the coupling between the two seems to have become weaker, because you can deal with each separately using simple strategies: use a bigger model (bias) and gather more data (variance).

This also implicitly shows why DL seems applicable to a wider range of problems than traditional learning models: with DL, you have at least two ways out of the situations described above, where you would otherwise get stuck in practice.

Use Human Level Error as a Reference

To know whether your error is high or low, you need a reference. Andrew suggests using human-level error as a proxy for the optimal error, or Bayes error. He strongly recommended finding this number before going deep into research, because it is critical for guiding your next step.

Let's say our goal is to build a human-level speech recognition system using DL. We usually split our data into three sets: train, dev (validation), and test. Gaps then appear between human-level error and the errors on these sets, as shown on his slide.

These gaps are called bias and variance. If you think about it for a moment, you will find it quite intuitive why he calls the gap between human-level error and training error bias, and the gap between training error and dev error variance.
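Written out, with E_human, E_train and E_dev standing for the human-level, training and dev error rates (my own notation, not from the slides), the two gaps are simply differences of adjacent errors:

```latex
\begin{aligned}
\text{bias}     &= E_{\text{train}} - E_{\text{human}} \\
\text{variance} &= E_{\text{dev}}   - E_{\text{train}}
\end{aligned}
```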

If your model has high bias and low variance, try a new model architecture or simply increase the model's capacity. On the other hand, if you have low bias but high variance, gathering more data is an easy remedy to try.

To see this more clearly, let's say you have the following results:

- Train error : 8%

- Dev error : 10%

If I told you that human-level error for this task is on the order of 1%, you would immediately see that this is a bias problem. On the other hand, if I told you that human-level error is around 7.5%, it would look more like a variance problem. In that case, you would do better to focus your efforts on methods such as data synthesis or gathering data more similar to the test set.
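The arithmetic in this example is easy to script. Below is a minimal sketch that computes both gaps relative to human-level error and reports which one dominates, using the 8% / 10% numbers above; the function name and the "focus on the larger gap" rule are my own simplification of his advice.

```python
from __future__ import annotations


def diagnose(human_error: float, train_error: float, dev_error: float) -> tuple[float, float, str]:
    """Compute the bias and variance gaps (in percentage points)
    and report which one is larger."""
    bias = train_error - human_error      # gap between human-level and training error
    variance = dev_error - train_error    # gap between training and dev error
    focus = "bias" if bias >= variance else "variance"
    return bias, variance, focus


# Train error 8%, dev error 10%, with the two human-level baselines from the text.
print(diagnose(human_error=1.0, train_error=8.0, dev_error=10.0))  # (7.0, 2.0, 'bias')
print(diagnose(human_error=7.5, train_error=8.0, dev_error=10.0))  # (0.5, 2.0, 'variance')
```

The same 8% / 10% results lead to opposite conclusions depending only on the human-level baseline, which is exactly why he insists on estimating that number first.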

As you can see, simply by using human-level performance as a baseline, you always have guidance on where to focus among the options you may have.

Note that he did not say it is "easy" to train a big model or to gather a huge amount of data. What he is trying to convey is that you at least have an "easy option to try", even if you are not an expert in this area. After all, building a new model architecture that actually works is not a trivial task, even for experts.

Still, some unavoidable issues remain that you need to overcome.

Data Crisis

To train a model efficiently on a finite amount of data, you need to manage the data set carefully or find a way to get more data (data synthesis). Here, I will focus on the former, which offers more intuition for practitioners.

Say you want to build a speech recognition system for a new in-car rearview mirror product. You have 50,000 hours of general speech data and 10 hours of in-car data. How would you split your data?

This is a BAD way to do it:

Having mismatched dev and test distributions is not a good idea. You may spend months optimizing for dev set performance only to find that it does not carry over to the test set.

So here is his suggestion for doing better:
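Since the slide itself is not reproduced here, the following is only a minimal sketch of the principle stated above: dev and test should both come from the distribution the product will actually see, i.e. the in-car data. The function name, the even dev/test split of the in-car clips, and the small "train-dev" hold-out carved from the general data (a slice from the training distribution) are my own assumptions for illustration, and the clip IDs are placeholders.

```python
from __future__ import annotations

import random


def split_speech_data(general: list[str], in_car: list[str],
                      train_dev_size: int, seed: int = 0) -> dict[str, list[str]]:
    """Split data so that dev and test share the target (in-car) distribution.

    general: IDs of clips from the large general-speech corpus.
    in_car:  IDs of clips from the small in-car corpus (the target product).
    train_dev_size: number of general clips held out as a train-dev set.
    """
    rng = random.Random(seed)   # fixed seed for a reproducible split
    general = general[:]        # copy so the caller's lists are not shuffled
    in_car = in_car[:]
    rng.shuffle(general)
    rng.shuffle(in_car)

    # Hold out a small slice of the training distribution (train-dev)
    # and train on the rest of the general data.
    train_dev = general[:train_dev_size]
    train = general[train_dev_size:]

    # Dev and test are both drawn from the in-car data, so they match
    # each other and the distribution the product will face.
    half = len(in_car) // 2
    dev, test = in_car[:half], in_car[half:]
    return {"train": train, "train_dev": train_dev, "dev": dev, "test": test}


# Placeholder IDs standing in for general-speech and in-car recordings.
splits = split_speech_data([f"g{i}" for i in range(1000)],
                           [f"c{i}" for i in range(20)], train_dev_size=50)
print({name: len(ids) for name, ids in splits.items()})
# {'train': 950, 'train_dev': 50, 'dev': 10, 'test': 10}
```

The exact proportions are a judgment call; the constraint the talk emphasizes is only that dev and test come from the same target distribution.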

A few remarks

While performance is worse than human-level, there are many good ways to make progress:

- error analysis

- estimate bias/variance

- etc.

After surpassing human performance, or at least getting close to it, however, you usually observe that progress slows down and almost stalls. There can be several reasons for this, such as:

- Labels are made by humans (so the limit lies here)

- Human-level error may be close to the optimal error (Bayes error, the theoretical limit)

What you can do here is find a subset of the data on which the model still performs worse than humans, and make the model do better on it.
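One way to find such a subset is to break the error down by data slice and compare each slice against a human-level estimate for it. The sketch below assumes you already have per-slice model and human error rates; the slice names and numbers are made up purely for illustration.

```python
from __future__ import annotations


def slices_worse_than_human(errors: dict[str, tuple[float, float]]) -> list[str]:
    """Return the slices where the model is still worse than humans,
    largest gap first.

    errors maps slice name -> (model_error, human_error), in percent.
    """
    gaps = {name: model - human for name, (model, human) in errors.items()}
    worse = [name for name, gap in gaps.items() if gap > 0]
    return sorted(worse, key=lambda name: gaps[name], reverse=True)


# Hypothetical per-slice error rates: (model %, human %).
per_slice = {
    "quiet room": (2.0, 3.0),
    "highway noise": (9.0, 4.0),
    "accented speech": (6.0, 5.0),
}
print(slices_worse_than_human(per_slice))  # ['highway noise', 'accented speech']
```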

Andrew ended the presentation by emphasizing two ways to improve one's skills in deep learning:

practice, practice, practice, and do the dirty work (read a lot of papers and try to replicate the results).

These happen to match the headline of my blog exactly. I am glad that he and I share a similar point of view:

READ A LOT, THINK IN PICTURES, CODE IT, VISUALIZE MORE!

I hope you enjoyed my summary. Thank you for reading :)

Thanks for the summary. But could you explain why the data is split into train-dev and dev for training?

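A long time ago, it was common to split things into three steps: fix the model with train, fix the parameters with dev, and do the final evaluation with test. I would appreciate an answer if you know.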

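Hello. I think you are asking about what appears in the last two slides. As you said in your comment, it is exactly the same as the usual setup; the slides just express it that way, and honestly I also think the wording on the slides is a bit confusing. If you listen to the talk itself, I think you will quickly see that this part is not very difficult.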

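As far as I know, he phrased it this way because he wanted to make the distributions of the dev set and the test set similar. Starting from the usual three-way train-dev-test split, he meant that you should mix dev and test somewhat (for example, half and half), as in train-TRAINDEV(dev/2, test/2)-TEST(dev/2, test/2). In other words, the slide's wording is a bit strange. In the usual three-way split, dev is normally carved out of train; instead of doing that, you combine that dev set with some of the data previously used for testing to create a new dev set, and for some reason the slide calls this new dev set "train-dev". If you only look at the slides without watching the video, it is natural to be confused.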
